Resonating with the vibes

Generative AI models and agent-based systems are changing the way we interact with technology. "Vibe coding" has become a viable tool for speeding up the development process, and much like when the IDE took over from text-based editors, technologists are experimenting to find what works, what doesn't, and how best to use the new tools.

Every few weeks there is a new major product release, either from one of the closed models (e.g. Claude from Anthropic) or in the Open Source space (e.g. GitHub Copilot). At afrolabs we have been following these developments with great interest, looking for what works for us and other developers, and how we can incorporate it into our ways of working and the projects we develop.

Vibing to Production Readiness

This project began with the GM of amazee.io (Michael) trying to figure out if an idea was viable. He used Cursor to bring up a proof of concept using modern technologies in just a couple of weeks. Once he determined that the product stood a chance of working the way he wanted, he needed full-time development on it, with a focus on quality and problem solving. Enter afrolabs, and my work on this project.

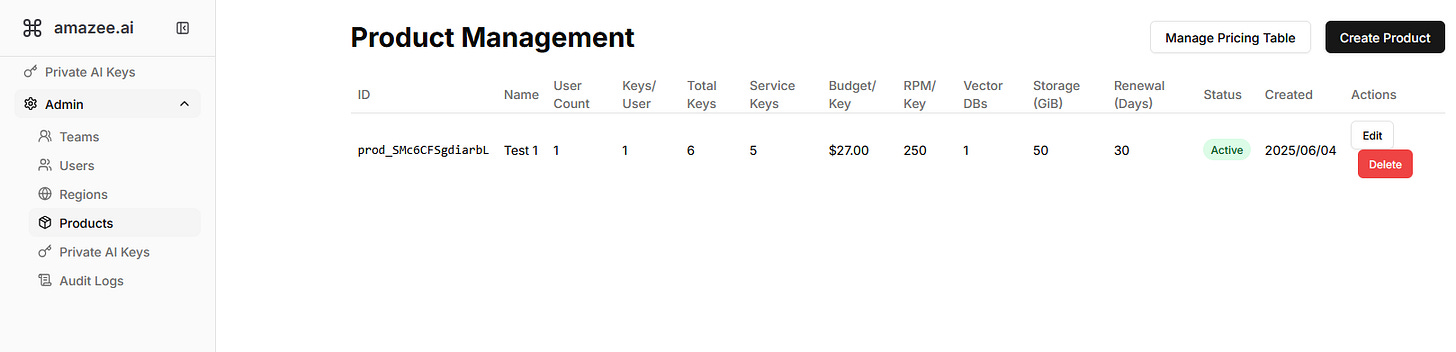

In March 2025 afrolabs entered into a development partnership with amazee.io to productionise a "vibe coded" proof of concept AI provider for Drupal AI. The result is amazee.ai. Offering LLM keys which work across many models and providers with minimal setup, and a vector database running PGVector, amazee.ai integrates seamlessly into the Drupal AI module, allowing developers to get up and running with just a couple of clicks.

I'll willingly admit to early scepticism about AI coding agents and coding assistants. I tried some of the early ones, and they were not quite as capable as I would have liked. This project gave me an opportunity to build a new appreciation for the power that they give, as well as some of the nuance that comes from LLMs. Given the project had been built in Cursor to begin with, continuing that process seemed natural. The project being Open Source means there are no major privacy concerns with the LLM learning from the code. The direct integration with Cursor incentivises learning to work with the agent, rather than just "turning it off when it gets annoying". I can (and do) still do that, but I also picked up some new techniques to make the agent an effective productivity booster.

Tuning In

Let us consider working with the AI like building a guitar. A taut string will make a sound when plucked, although that sound will be somewhat arbitrary. Adding a second string without tuning will often cause an unpleasant dissonance. Neither sound will travel particularly far (thank goodness) without building a resonance chamber. In the same way, coding agents on their own may be able to make something new, but without tuning, and building a resonance chamber, that product isn't going to go very far.

Despite (because of?) the advances in AI agents, software practices aren't going anywhere. When it comes to users, we all still want the same thing out of the products we build and use. We want reliability, consistency, responsiveness, and most of all functionality: we want them to do what they say they will do.

Tuning the AI assistant is not out of reach of the lay person, much as building a guitar is not a prerequisite for playing it. However, much as the design and build of a quality guitar requires a professional luthier, an unchecked LLM is unlikely to develop software which meets the non-functional requirements of the project. Security, efficiency, and readable code are not the LLM's primary concern. Testability and modularity are secondary to "it does a thing". Thankfully, we know how to work through these problems. Many junior developers, no matter how well intentioned, have the same blind spots. They also have many of the same strengths (with the added benefit of being much better at learning).

Testing for resonance

When building the resonance box of a guitar, a luthier will spend many hours building up exactly the right level of resonance in the body. Starting from a pattern, they will eventually rely on the precise sound each board makes when tapped to determine what they need to do next.

Similarly, one of the most effective tools I found for keeping the AI honest was building a comprehensive test suite. The project was started using pytest, and so I continued with that rather than migrating to a more behavioural framework, but that never stopped me from applying those same principles to building the tests. One of the prompts I have used frequently looks something like this:

Add a test case:

GIVEN <some base structure>

WHEN <method under test is called with certain parameters>

THEN <my expectation>

Depending on the direction of the wind, the model under the hood, and the context of the previous conversation, the AI may even include those requirements in a docstring in the test. The main benefit, though, is that I am able to use behaviour driven thinking to define what the code should do.
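To make the shape of such a test concrete, here is a minimal sketch of what a GIVEN/WHEN/THEN prompt might turn into. The `KeyStore` class and the test names are hypothetical, invented purely for illustration; they are not taken from the amazee.ai codebase. The tests are plain functions with bare `assert` statements, which is all pytest needs to collect and run them.

```python
# Hypothetical in-memory key store, used only to illustrate the test shape.
class KeyStore:
    def __init__(self):
        self._keys = {}

    def add(self, name, value):
        self._keys[name] = value

    def delete(self, name):
        # Returns True if a key was removed, False if it did not exist.
        if name not in self._keys:
            return False
        del self._keys[name]
        return True

    def names(self):
        return sorted(self._keys)


def test_delete_removes_only_the_named_key():
    """
    GIVEN a store holding two keys
    WHEN delete is called with the name of one of them
    THEN that key is gone and the other is untouched
    """
    # GIVEN
    store = KeyStore()
    store.add("alpha", "secret-a")
    store.add("beta", "secret-b")

    # WHEN
    removed = store.delete("alpha")

    # THEN
    assert removed is True
    assert store.names() == ["beta"]


def test_delete_unknown_key_reports_nothing_removed():
    """
    GIVEN a store holding one key
    WHEN delete is called with a name that was never added
    THEN nothing is removed and the existing key remains
    """
    # GIVEN
    store = KeyStore()
    store.add("alpha", "secret-a")

    # WHEN
    removed = store.delete("gamma")

    # THEN
    assert removed is False
    assert store.names() == ["alpha"]
```

Keeping the GIVEN, WHEN, and THEN markers as comments inside the test body, as well as in the docstring, makes it easy to check that the generated test matches the behaviour that was asked for.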

As my test suite became more complex, I could use the AI assistant to make it easier to digest. "List all the tests regarding key deletion" gives me a deep-linked table of contents for a certain category of tests. I also used "list all the tests in this file". This helps me to find missing cases, and to use additional testing to reproduce bugs (and then fix them). Taking that a step further, I could ask it to "go through all of these tests, and find any duplication". After each run of the tests, if anything failed, I could simply paste the pytest output into the prompt, and the AI would work through possible solutions.

This is where it begins to get tricky. There are certain classes of error which the AI is not good at resolving. It also requires direction on whether the test or the implementation is correct. We need to tap the board and listen, not just rely on the pattern. Sometimes you get a result which causes your test to fail, and you realise that it is because you put in the wrong expectation. Other times you are following test driven development, and you now want to update the implementation to meet the new expectations. This is where it is good to treat the AI as a junior developer. Prompting with "Update the implementation so that this test passes" primes it to know where to make changes. Rejecting a change and telling it "the error is not in that piece of code, rather look here" can save a lot of arguing with a machine. Some errors are also just quicker to fix by hand. That one weird SQLAlchemy issue that you know how to solve? Maybe just quickly fix it.

Resonating

There is a lot more to go into when it comes to the effective use of AI coding assistants. I could talk about building new APIs, integrating with third parties, infrastructure as code, and full RED monitoring. For now, let us accept that they need a fair amount of oversight, but they really can reduce the time-to-market for a product. The trick is to find the right balance, as with any new tool. I still use vim, cat, grep, and less all the time. I also use a fully featured IDE, and now AI assistants. I pick the right tool for the job I am doing in the moment.

Building in the right feedback loops can provide resonance in the system. Prototyping is one of the most important aspects of the beginning of a project. Being able to "hack together" a proof of concept in a couple of days, and get it working reliably enough for customer demos in a couple of weeks? That's a game changer. For a single engineer to build a full production system, with monitoring, dashboards, security review, and put it in the hands of real customers in a few months? That level of stability is all we have ever wanted. The assistant speeds up the learning of new systems and frameworks. It gives a backend engineer the necessary frontend skills to put a façade over an API.

I have found that the sharpest edge on integrated coding assistants is in the integration. Having the AI interrupt my flow and hijack the tab and esc keys drives me up the wall. If it were more closely integrated with IntelliSense I might find it more intuitive to use. The technology continues to evolve, and in time it may be that we are so used to the agent's suggestions that we don't know what to do without them. It is still important to validate the approach to solving a problem, but allowing the machine to take on the repetitive and boring tasks will allow us to build and iterate faster than ever.